If you want to move to AWS Serverless as fast as possible, but all your HTTP servers live in Docker container images and you have no idea how to start the migration, here is an easy way to lift and shift to AWS Lambda.

Hi, I'm Felipe. I've been a Serverless Expert for the past 5 years, helping many clients lift and shift to AWS Serverless, improve their practices, and make better and smarter decisions around their architecture.

The problem

One of the most common scenarios that I've faced is a client wanting to migrate their containerized HTTP servers to AWS Serverless as fast as possible. In this situation, there are two major options: AWS ECS FARGATE or AWS LAMBDA CONTAINERS.

But when should you use one or the other?

As with many aspects of software development, the choice depends on several factors. For the sake of simplicity in this discussion, I will focus on what I consider the most crucial factors for decision-making in a lift and shift scenario, discuss the pros and cons of each option, and finally decide between two AWS Serverless container services: AWS LAMBDA CONTAINERS and AWS ECS FARGATE.

To determine the most suitable service, we need to answer a few questions: What are the traffic patterns? Are they characterized by spikes, or do they remain relatively constant? Which protocols do your servers speak? Do you exclusively serve HTTP requests, or do you also use other protocols such as gRPC and WebSockets?
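As a preview of where the next two sections land, these questions can be folded into a rough rule of thumb. The sketch below is only an illustration: the `Workload` class and `suggest_service` function are hypothetical names, and a real decision would also weigh cost, latency, and team experience.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Hypothetical description of a containerized service being migrated."""
    spiky_traffic: bool  # True if traffic is bursty or hard to predict
    http_only: bool      # True if the service only serves HTTP requests


def suggest_service(workload: Workload) -> str:
    """Return a first suggestion; a heuristic, not a definitive rule."""
    if not workload.http_only:
        # Protocols other than HTTP point toward Fargate in this article.
        return "ECS Fargate"
    if workload.spiky_traffic:
        # HTTP-only with unpredictable traffic is the Lambda Containers case.
        return "Lambda Containers"
    # Constant, predictable HTTP traffic.
    return "ECS Fargate"
```

For example, `suggest_service(Workload(spiky_traffic=True, http_only=True))` returns `"Lambda Containers"`.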

Fargate vs. Lambda Containers

Fargate

FARGATE is an amazing tool for serverless containers. For applications with constant traffic and few spikes, it generally offers better performance and lower overall cost than LAMBDA. It also gives you a much wider choice of protocols and customization: beyond HTTP you can use raw TCP, UDP, SSH, etc.

Taking that all into consideration, if you have constant traffic with very predictable daily access, or if you use protocols other than HTTP, I would certainly recommend FARGATE.

AWS Lambda Containers

But that is not the case for most of the startups out there. The most common scenario that I've faced is startups that have developed their product using a simple Docker container with a small HTTP server that has unpredictable traffic due to their business model. They don't even know if they are going to become a unicorn in the next year and have to scale up their servers or if they are going to pivot their product. That is why they are looking for a serverless solution that can fit their needs and scale on demand without breaking the bank.

For this specific case, in a lift and shift scenario, I usually recommend using the AWS Lambda Web Adapter with AWS Lambda Containers. The AWS Lambda Web Adapter is a tool written in Rust by AWS Labs that runs alongside your server as a Lambda extension and turns the Lambda events generated by HTTP requests into real HTTP requests to the server inside the container.

With that, you can take any simple HTTP server in a Docker container and deploy it on a Lambda environment immediately!
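To make the proxying idea concrete, here is a conceptual Python sketch of the translation step: it takes an API Gateway HTTP API event (payload format 2.0) and works out the HTTP request that would be sent to the server inside the container. This is not the adapter's actual code (that is written in Rust). The `LocalRequest` type, the `event_to_request` function, and the default port of 8080 are assumptions made for illustration.

```python
import base64
from typing import NamedTuple


class LocalRequest(NamedTuple):
    """Hypothetical container for the request forwarded to the local server."""
    method: str
    url: str
    headers: dict
    body: bytes


def event_to_request(event: dict, port: int = 8080) -> LocalRequest:
    """Translate an API Gateway HTTP API (v2.0) event into a local HTTP request."""
    method = event["requestContext"]["http"]["method"]

    # Rebuild the URL against the server listening inside the container.
    url = f"http://127.0.0.1:{port}{event['rawPath']}"
    if event.get("rawQueryString"):
        url += "?" + event["rawQueryString"]

    # The event body may be plain text or base64-encoded.
    raw_body = event.get("body") or ""
    if event.get("isBase64Encoded"):
        body = base64.b64decode(raw_body)
    else:
        body = raw_body.encode("utf-8")

    return LocalRequest(method, url, dict(event.get("headers") or {}), body)
```

The response travels the opposite way: the server's HTTP response is converted back into the structure Lambda returns to API Gateway.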

Hands-On!

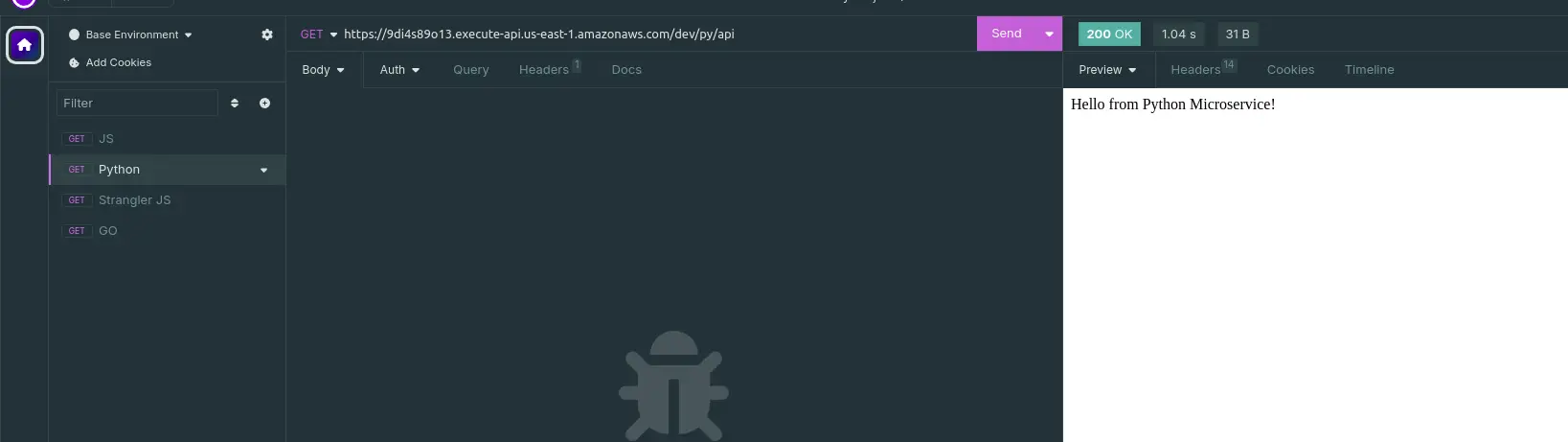

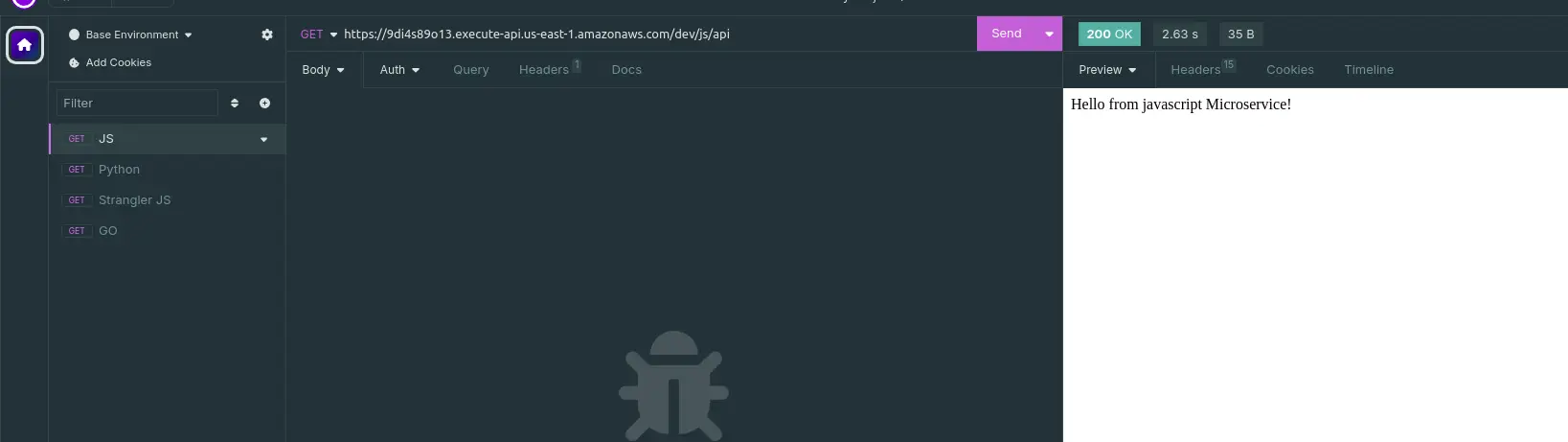

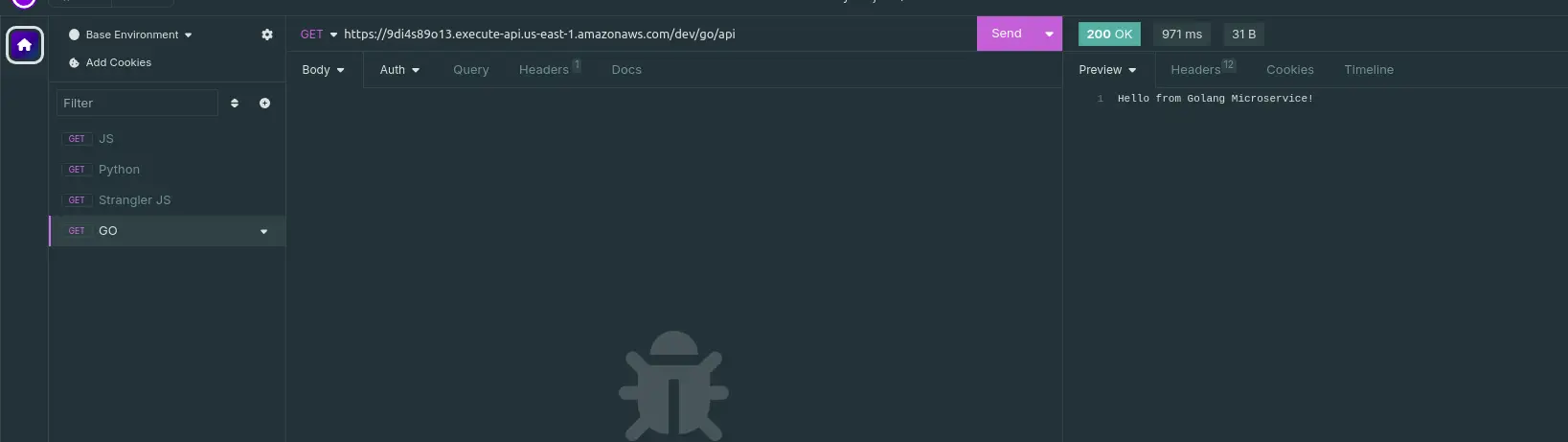

Let's walk through a simple example of how to deploy three simple HTTP microservices under the same AWS API Gateway using Serverless Framework and three different languages: Go, Python, and JavaScript with Node.js.

After that, I will give you the best practices and next steps for your serverless microservices using the Strangler Pattern to break down those servers into modular and more secure Lambda functions.

First, we need AWS CLI, Serverless Framework, and Docker installed. After that, we can create our serverless.yaml file.

Then we will add three folders: one for our Go microservice, one for our Node.js microservice, and one for our Python microservice.

In each one of them, let's create a Dockerfile based on the language's Alpine image, then add the Lambda Web Adapter and the command that starts the server.

Python

Node.js

Go

Now let's create a simple HTTP server in each language that returns a string from its endpoint.

Python
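The original listing for this step is not reproduced here, so the following is a minimal stand-in rather than the code from the example repository. It uses only the Python standard library and answers every GET request with a fixed string. The response text, the `HelloHandler`, `make_server`, and `main` names, and the choice of reading the port from a `PORT` environment variable with 8080 as the fallback are all assumptions.

```python
import os
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Answers every GET request, whatever the path, with a fixed string."""

    def do_GET(self):
        body = b"Hello from Python!"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(port: int) -> ThreadingHTTPServer:
    # Bind to all interfaces so the server is reachable inside the container.
    return ThreadingHTTPServer(("0.0.0.0", port), HelloHandler)


def main() -> None:
    # Assumed convention: port taken from PORT, falling back to 8080.
    make_server(int(os.environ.get("PORT", "8080"))).serve_forever()
```

Calling `main()` from the container's start command brings the server up. Because the handler ignores the path, it responds the same way to `/py/api` and to anything below it.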

Node.js

Go

Now let's create the endpoints for each microservice, using the {proxy+} greedy path parameter so that anything after the /lang/api prefix (where lang is py, js, or go) is forwarded to the matching microservice.
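If the greedy parameter is new to you, the following Python sketch models what it captures. It is a simplified illustration of the matching idea, not API Gateway's implementation, and `match_proxy_route` is a hypothetical helper name.

```python
from typing import Optional


def match_proxy_route(route: str, path: str) -> Optional[str]:
    """Return the part of `path` captured by {proxy+}, or None if no match.

    `route` must end in "/{proxy+}", for example "/py/api/{proxy+}".
    """
    suffix = "/{proxy+}"
    if not route.endswith(suffix):
        raise ValueError("route must end with /{proxy+}")

    # Everything up to and including the slash before {proxy+} must match.
    prefix = route[: -len(suffix)] + "/"

    # The greedy parameter needs at least one character to capture.
    if path.startswith(prefix) and len(path) > len(prefix):
        return path[len(prefix):]
    return None
```

Here `match_proxy_route("/py/api/{proxy+}", "/py/api/users/42")` returns `"users/42"`, while a path under `/js/api` returns `None`. In this simplified model a bare `/py/api` captures nothing, so it would need a route of its own.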

Done! Now let's deploy it and see each microservice in action! Just type ‘sls deploy’ in your terminal and watch the magic happen!

After the deployment, accessing the API Gateway base URL with /py/api shows our Python microservice's response, and the same works for /js/api and /go/api.

Conclusion

We learned when to choose AWS Lambda Containers over AWS Fargate for a lift and shift use case, and how to migrate Docker-based HTTP servers written in several programming languages using the AWS Lambda Web Adapter.

Next Steps and Best Practices

Now, what's next!? It is not good practice to have a single Lambda function that carries many responsibilities, broad permissions, or a whole microservice. We only did that to speed up the lift and shift process.

Now we apply the Strangler Pattern and slowly break each endpoint out into its own Lambda function, following the principle of least privilege. This can be done by adding a new function whose route overrides the URL for that path.
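The override works because a more specific route wins over the greedy one. The Python sketch below models that precedence with a plain dictionary. The route paths and function names in it (`js-container-lambda`, `js-users-lambda`) are made up for illustration, and `resolve` is a hypothetical helper, not a Serverless Framework or API Gateway API.

```python
from typing import Dict, Optional

GREEDY = "{proxy+}"


def resolve(routes: Dict[str, str], path: str) -> Optional[str]:
    """Pick the function for `path`: exact routes first, then greedy ones."""
    # 1. An exact route is the "strangled" endpoint with its own function.
    if path in routes:
        return routes[path]

    # 2. Otherwise fall back to the greedy route with the longest prefix,
    #    which is the original lift-and-shift container function.
    best_target, best_len = None, -1
    for route, target in routes.items():
        if not route.endswith("/" + GREEDY):
            continue
        prefix = route[: -len(GREEDY)]  # keeps the trailing slash
        if path.startswith(prefix) and len(path) > len(prefix) and len(prefix) > best_len:
            best_target, best_len = target, len(prefix)
    return best_target


# Hypothetical route table after extracting one endpoint.
ROUTES = {
    "/js/api/{proxy+}": "js-container-lambda",
    "/js/api/users": "js-users-lambda",
}
```

With this table, `resolve(ROUTES, "/js/api/users")` returns `"js-users-lambda"`, while `resolve(ROUTES, "/js/api/orders")` still falls through to `"js-container-lambda"`. Each extracted endpoint adds one more exact entry until the greedy route has nothing left to serve.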

Now you have a separate endpoint in JavaScript that has its own CPU, memory, environment, and permissions, following some of the best serverless practices. The next step is to keep doing that for all endpoints until you finish the Strangler Pattern.

That's it for this blog post, folks! Thank you for your time, and leave a comment below if you have any questions!

Research/References

https://github.com/awslabs/aws-lambda-web-adapter

Install or update to the latest version of the AWS CLI - AWS Command Line Interface

Setting Up Serverless Framework With AWS

GitHub Repo with Example

https://github.com/felipegenef/simple-lambda-containers-microservices
