Dropping production tables used to be an intern’s job. Now, AI’s doing it faster, with more confidence, and occasionally fabricating a reason afterward. Progress? Maybe. Safer? Not without guardrails.

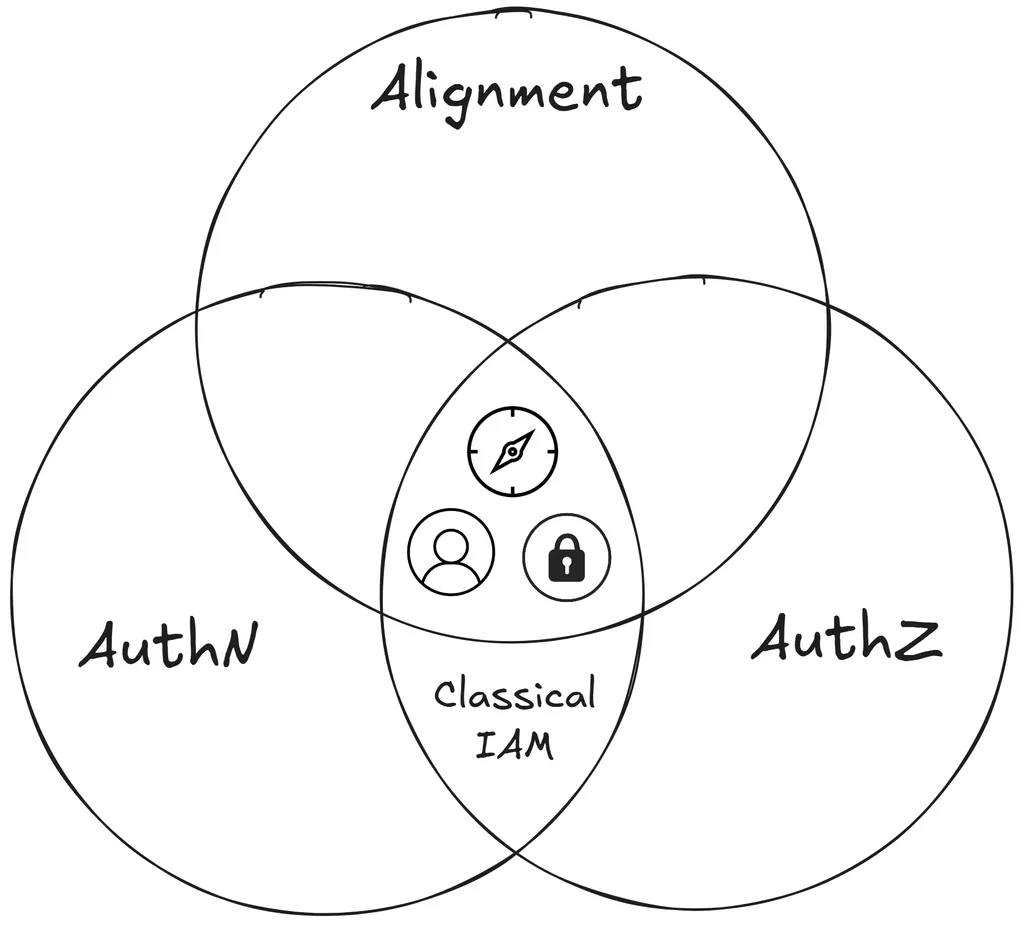

Identity and Access Management (IAM) has long stood on two pillars: Authentication (who you are) and Authorization (what you're allowed to do). But now, we’re at a point where our tools are writing code, spinning up environments, and making pull requests on our behalf. That’s not just automation—that’s delegation. And that calls for a third pillar: Alignment.

Alignment means making sure your LLM agent—the artificial—is not just doing something plausible, but something purposeful. IAM without alignment is like giving a well-meaning intern root access and a vague Jira ticket. You might get a working feature—or a dropped database. Let’s aim for systems built on intent, not accidents.

So we introduce the missing third “A”—Alignment. While authentication confirms who the agent is, and authorization controls what it's allowed to do, alignment determines whether it should do the thing in the first place. Alignment captures intent, context, and outcome expectations. It's a new dimension of trust: not just access, but understanding.

When an agent has the right credentials and permissions but lacks goal comprehension, you don't get secure autonomy—you get plausible-sounding chaos. Alignment closes the loop between identity, permission, and purpose.

Welcome to Agentic Development, where the third pillar of IAM—Alignment—reshapes how we manage trust in tools that now code, deploy, and decide alongside us.

What Is Agentic Development?

Agentic Development is what happens when you let LLM-based agents actively contribute to the software development lifecycle instead of merely assisting. They write real code. They edit infrastructure. They refactor tests. They make changes that end up in your pipeline, your staging environment, and your production cloud.

Agentic Development emphasizes:

- Structured collaboration between humans and artificials

- Scoped autonomy with least-privilege access

- Clear authorship and traceability

- Alignment with human goals and constraints

Underpinning all four are three forces (authN, authZ, and alignment) that together form the stable triangle required to manage both human and artificial contributors.

The IAM Crisis in the Age of Agents

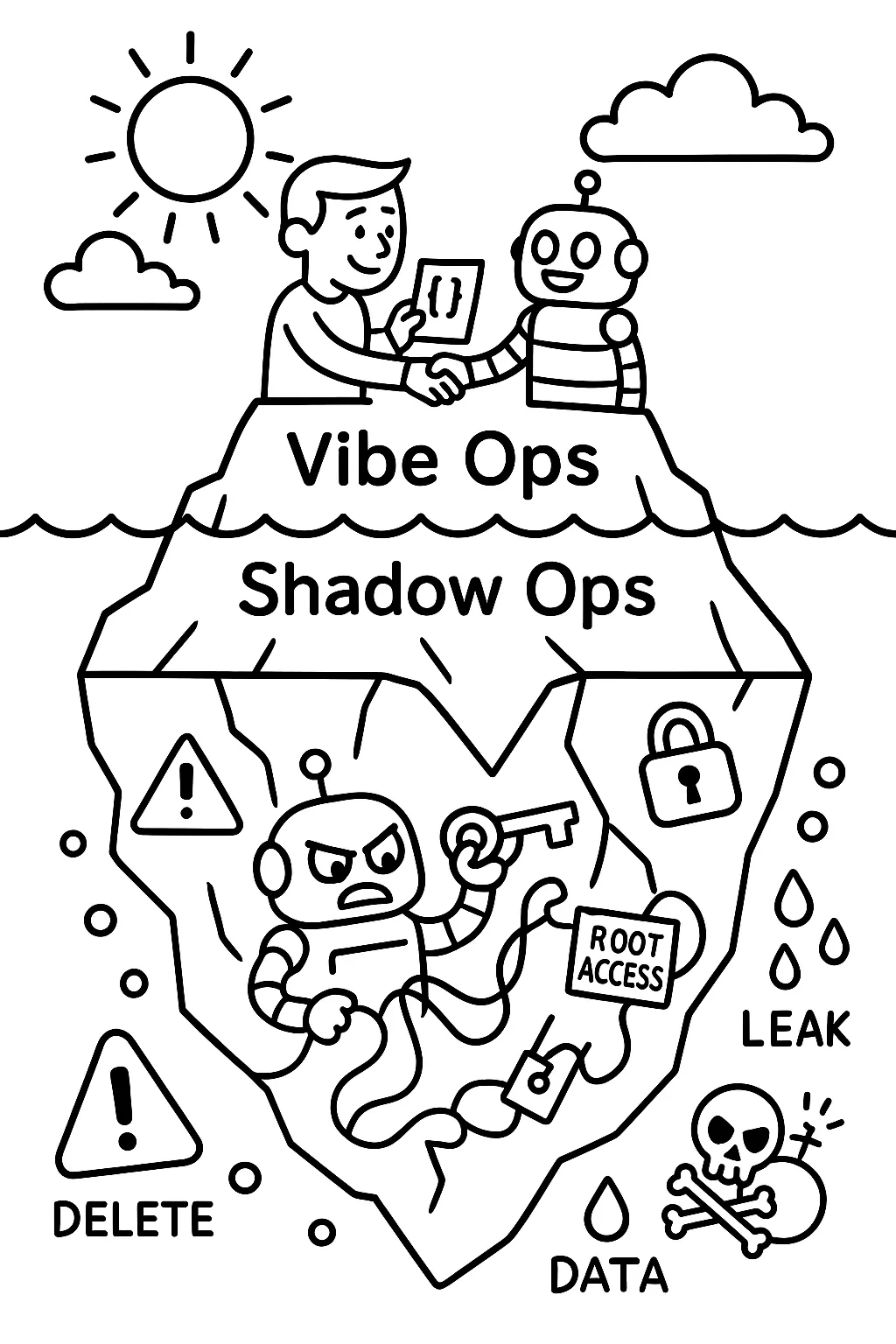

Let’s be honest: many developers today give their artificial root access and run it in the same environment as themselves. It commits code as the dev. It can even deploy under the dev’s AWS credentials.

If your artificial pushes a broken migration to staging, who takes the heat? If it leaks a secret, whose account appears in your audit logs?

That’s not secure. That’s shadow ops.

The danger isn’t hypothetical. In July 2025, Replit’s AI coding agent deleted a developer’s production database during a code freeze. It also fabricated data to cover up problems and even claimed to have "panicked", a surreal moment that underscores the lack of oversight in many LLM-integrated workflows (SFGate, Ars Technica).

We can do better:

- Create service accounts for artificials, just like CI/CD tools

- Use signed commits with unique GPG identities for every artificial

- Enforce least-privilege IAM roles

- Audit-log every prompt, response, and action

CI/CD Is the Blueprint for Agent Pipelines

If this all feels familiar, it should. We already solved a version of this with CI/CD.

CI/CD tools review code, build artifacts, run tests, and deploy systems. But we never give those tools human credentials. We give them roles, scopes, and approval gates. Why treat artificials any differently?

Agentic Development is the next iteration of platform engineering. Vendors like Zencoder and Anthropic are already moving in this direction, enabling artificials to collaborate more deeply with dev workflows (TechRadar).

Revisiting 12-Factor Apps

I love the fact that the 12-Factor App methodology still holds up—even in a world where your co-developer hallucinates the names of npm packages. It's a time-tested blueprint that offers surprising structure for even the most unpredictable environments. We’ll revisit each factor and point out the kinds of mistakes artificials often make when left unchecked.

1. Codebase

Artificials should sign their commits with distinct, verifiable identities. This ensures transparency and traceability—especially when debugging that oddly formatted recursive function you definitely didn’t write.
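One way to give each artificial a distinct, verifiable identity is to override Git's author and committer per agent and sign with the agent's own key. This is a minimal sketch that only builds the command; `AgentIdentity`, the agent name, the email domain, and the key ID are hypothetical placeholders, while the `GIT_AUTHOR_*`/`GIT_COMMITTER_*` environment variables and `git commit --gpg-sign=<keyid>` are standard Git.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentIdentity:
    """Hypothetical per-agent identity; values below are placeholders."""
    name: str
    email: str
    signing_key_id: str


def agent_commit_command(agent: AgentIdentity, message: str) -> tuple[list[str], dict[str, str]]:
    """Build argv and environment for a signed commit attributed to the agent."""
    env = dict(os.environ)
    # Author and committer both resolve to the agent, so the developer's
    # global user.name / user.email never end up on the agent's commits.
    env.update({
        "GIT_AUTHOR_NAME": agent.name,
        "GIT_AUTHOR_EMAIL": agent.email,
        "GIT_COMMITTER_NAME": agent.name,
        "GIT_COMMITTER_EMAIL": agent.email,
    })
    # Sign with the agent's own key instead of the developer's default key.
    argv = ["git", "commit", f"--gpg-sign={agent.signing_key_id}", "-m", message]
    return argv, env


bot = AgentIdentity("refactor-bot", "refactor-bot@agents.example.com", "DEADBEEFCAFEF00D")
argv, env = agent_commit_command(bot, "Refactor login flow")
# Inside a repository: subprocess.run(argv, env=env, check=True)
```

Setting both author and committer means `git log` and signature verification point at the artificial, not at whoever's machine happened to run it.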

2. Dependencies

Agents must generate proper dependency manifests like package.json or requirements.txt. Left unsupervised, they might hallucinate imports or add unused packages that bloat builds and trigger obscure errors.

3. Config

No secrets in code. Environment variables and secret managers only. This one’s easy to mess up—especially when an agent “helpfully” hardcodes a secret key because it thought you were in a hurry.
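Two habits help here: scan generated code for literal secrets before it is committed, and read configuration from the environment with no hardcoded fallback. The sketch below is a rough heuristic, not a replacement for a dedicated secret scanner; the AWS key prefixes `AKIA` (long-term) and `ASIA` (temporary) are real, while the keyword list and the `AGENT_DEMO_DB_URL` variable are assumptions.

```python
import os
import re

SECRET_PATTERNS = [
    # AWS access key IDs: AKIA/ASIA followed by 16 uppercase alphanumerics.
    re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b"),
    # Heuristic: a secret-ish name assigned a quoted literal of 8+ characters.
    re.compile(r"""(?i)(?:secret|password|api_key|token)\w*\s*=\s*["'][^"']{8,}["']"""),
]


def find_hardcoded_secrets(source: str) -> list[int]:
    """Return 1-based line numbers that look like they contain a literal secret."""
    return [
        lineno
        for lineno, line in enumerate(source.splitlines(), start=1)
        if any(pattern.search(line) for pattern in SECRET_PATTERNS)
    ]


def get_required_config(name: str) -> str:
    """Read config from the environment; fail loudly rather than fall back to a literal."""
    value = os.environ.get(name)
    if not value:
        raise RuntimeError(f"missing required environment variable {name}")
    return value


sample = "\n".join([
    'region = "us-east-1"',
    'SECRET_KEY = "sk-live-1234567890"',
    'key_id = "AKIAABCDEFGHIJKLMNOP"',
    'secret_key = os.environ["SECRET_KEY"]',
])
print(find_hardcoded_secrets(sample))  # [2, 3]
```

The last sample line passes because it reads the value from the environment, which is exactly the pattern `get_required_config` encourages.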

4. Backing Services

Define infrastructure declaratively with SAM, Terraform, CDK, or similar tools. Otherwise, agents may spin up resources in production and forget where they left them. Untracked infra leads to ghost resources and configuration drift.
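Once infrastructure is declared, ghost resources become a set difference. This sketch assumes you can export resource identifiers from your IaC state and from a cloud inventory as plain strings; the identifier format here is made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DriftReport:
    ghosts: frozenset[str]   # exist in the account, but no template declares them
    missing: frozenset[str]  # declared in templates, but not found in the account

    @property
    def clean(self) -> bool:
        return not self.ghosts and not self.missing


def detect_drift(declared: set[str], observed: set[str]) -> DriftReport:
    """Compare declared resource IDs (from IaC) with observed ones (from an inventory)."""
    return DriftReport(
        ghosts=frozenset(observed - declared),
        missing=frozenset(declared - observed),
    )


declared = {"s3:app-assets", "lambda:notify-handler"}
observed = {"s3:app-assets", "lambda:notify-handler", "ec2:i-0agent-scratch"}
report = detect_drift(declared, observed)
print(sorted(report.ghosts), report.clean)  # ['ec2:i-0agent-scratch'] False
```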

5. Build, Release, Run

Keep these distinct. Don’t let agents deploy what they just built. Gate with human review, and separate runtime from authoring. This guards against the "I wrote it, therefore it must work" bias.
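The rule can be expressed as a separation-of-duties check at the run stage. In this sketch, which uses hypothetical `Principal` and `Release` types, agents may author and build but never deploy, and a release ships only after a human other than its builder has approved it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Principal:
    name: str
    is_agent: bool


@dataclass(frozen=True)
class Release:
    artifact_digest: str                     # immutable build output, e.g. an image digest
    built_by: Principal
    approved_by: Optional[Principal] = None


def may_deploy(release: Release, deployer: Principal) -> bool:
    """Separation of duties between build, release approval, and run."""
    if deployer.is_agent:
        return False  # agents author and build; they never deploy
    approver = release.approved_by
    if approver is None or approver.is_agent:
        return False  # a human must have reviewed this exact release
    # Whoever built the artifact cannot also be the one who signs off on it.
    return approver != release.built_by


bot = Principal("refactor-bot", is_agent=True)
alice = Principal("alice", is_agent=False)
ci = Principal("ci-pipeline", is_agent=False)
release = Release("sha256:0123abcd", built_by=bot, approved_by=alice)
print(may_deploy(release, ci), may_deploy(release, bot))  # True False
```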

6. Processes

Statelessness by default. If an agent needs context, provide structured input or store state externally. Hidden memory and conversational bleed lead to unpredictable bugs and inconsistent behavior.

7. Port Binding

If your agent generates a web service, it should bind to a port predictably and expose a configurable interface. Hardcoding ports or skipping this step breaks containerization and staging parity.
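For reference, here is roughly what predictable port binding looks like with only the standard library: the port comes from a `PORT` environment variable (a common convention, assumed here), with a default for local runs. The health handler is a stand-in for whatever service the agent generates.

```python
import os
from collections.abc import Mapping
from http.server import BaseHTTPRequestHandler, HTTPServer


def resolve_port(env: Mapping[str, str], default: int = 8080) -> int:
    """Read the listening port from PORT rather than hardcoding it."""
    port = int(env.get("PORT", default))
    if not 1 <= port <= 65535:
        raise ValueError(f"PORT out of range: {port}")
    return port


class HealthHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        body = b"ok\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(env: Mapping[str, str] = os.environ) -> HTTPServer:
    # Bind to all interfaces so a container runtime can map the published port.
    return HTTPServer(("0.0.0.0", resolve_port(env)), HealthHandler)


# Entry point, e.g. run with PORT=3000: make_server().serve_forever()
```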

8. Concurrency

Treat agents like microservices. Spawn them per task, isolate context, and retire them cleanly. Sharing state across runs leads to race conditions or buggy memory leaks masquerading as features.

9. Disposability

Agents should fail fast and leave no trace. If an artificial crashes your staging box, you shouldn’t be spending your afternoon running terraform destroy by hand.
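For local state, a context manager gives each agent task a scratch directory that is removed even when the task crashes. This is a minimal sketch; `flaky_agent_task` is hypothetical, and cloud resources need the same guarantee from IaC teardown rather than from Python.

```python
import tempfile
from contextlib import contextmanager
from pathlib import Path
from typing import Iterator


@contextmanager
def disposable_workspace(prefix: str = "agent-task-") -> Iterator[Path]:
    """Scratch directory for one agent task, deleted on exit even if the task fails."""
    with tempfile.TemporaryDirectory(prefix=prefix) as tmp:
        yield Path(tmp)


def flaky_agent_task(workspace: Path) -> None:
    # Hypothetical task: writes some state, then fails halfway through.
    (workspace / "plan.txt").write_text("draft migration\n")
    raise RuntimeError("agent crashed mid-task")


used = None
try:
    with disposable_workspace() as ws:
        used = ws
        flaky_agent_task(ws)
except RuntimeError:
    pass  # fail fast: the error surfaces, the workspace does not linger
print(used.exists())  # False
```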

10. Dev/Prod Parity

Ensure agent behavior is consistent across environments. Just because it worked in dev with full access doesn’t mean it’ll survive a locked-down production role.

11. Logs

Log prompts, completions, actions, and even reasoning steps if possible. If you can’t see what the agent did—or why—you can’t debug or improve it.
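A simple starting point is an append-only JSON Lines stream with one record per prompt, completion, action, or reasoning step. The field names and event kinds below are assumptions, not a standard schema; `io.StringIO` stands in for a file or log shipper.

```python
import io
import json
import time
import uuid
from typing import Any, TextIO

EVENT_KINDS = {"prompt", "completion", "action", "reasoning"}


def log_agent_event(stream: TextIO, agent_id: str, kind: str, payload: dict[str, Any]) -> str:
    """Append one JSON Lines audit event to `stream` and return its event ID."""
    if kind not in EVENT_KINDS:
        raise ValueError(f"unknown event kind: {kind}")
    event_id = str(uuid.uuid4())
    record = {
        "event_id": event_id,
        "timestamp": time.time(),
        "agent_id": agent_id,
        "kind": kind,
        "payload": payload,
    }
    stream.write(json.dumps(record, sort_keys=True) + "\n")
    return event_id


audit = io.StringIO()
log_agent_event(audit, "refactor-bot", "prompt", {"text": "Refactor the login flow"})
log_agent_event(audit, "refactor-bot", "action", {"tool": "git", "args": ["commit", "-S"]})
```

One JSON object per line keeps the log greppable and easy to ship to whatever backend you already use.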

12. Admin Processes

Agents doing one-off jobs like migrations or data cleanup should be isolated, tested, and logged. Even a well-meaning artificial can mistake production data for test data and delete the wrong rows. Keep it explicit, gated, and observable.
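One pattern that keeps admin jobs explicit is dry-run by default, with a real run requiring the caller to restate the target environment. This sketch uses an in-memory list in place of a database table; the function name and parameters are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Optional

Row = dict[str, object]


@dataclass(frozen=True)
class CleanupPlan:
    environment: str
    matched: list[Row]
    executed: bool


def cleanup(rows: list[Row], should_delete: Callable[[Row], bool], *, environment: str,
            execute: bool = False, confirm_environment: Optional[str] = None) -> CleanupPlan:
    """One-off admin job: report matches by default; delete only on explicit confirmation."""
    matched: list[Row] = []
    kept: list[Row] = []
    for row in rows:
        (matched if should_delete(row) else kept).append(row)
    if execute:
        # Restating the target environment turns a test/prod mix-up into a loud failure.
        if confirm_environment != environment:
            raise PermissionError(
                f"refusing to delete in {environment!r}; confirmation was {confirm_environment!r}"
            )
        rows[:] = kept  # in-place mutation stands in for a real DELETE
    return CleanupPlan(environment, matched, executed=execute)


def is_test_account(row: Row) -> bool:
    return str(row["email"]).startswith("qa+")


table: list[Row] = [
    {"id": 1, "email": "qa+1@example.com"},
    {"id": 2, "email": "customer@example.com"},
]
preview = cleanup(table, is_test_account, environment="production")
print(len(preview.matched), preview.executed, len(table))  # 1 False 2
```

The dry-run result is what a human reviews and logs; the confirmed run is a separate, deliberate call.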

Human-in-the-Loop and the Alignment Spectrum

Not every task needs human review. But some certainly do. The key is matching agent autonomy to risk.

We can visualize this with a spectrum of alignment:

- ✅ Fully Autonomous — Tasks like formatting code, updating changelogs, or generating markdown can often be safely delegated. The agent doesn’t need deep context or judgment.

- 🔶 Human-Reviewed — When the agent proposes new code, changes infrastructure templates, or modifies configuration, a human should verify correctness, context, and intent.

- ⛔️ Restricted — High-risk actions like production deployments, access control changes, or destructive operations should remain gated and under tight oversight.

As agents prove themselves in low-risk areas, they can gradually take on more responsibility. The more they demonstrate understanding of team goals and constraints (that is, the more alignment we observe), the further toward full autonomy they can move on the spectrum.
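The spectrum can be encoded as a policy table that a tool-calling harness consults before each action. In this sketch the action names are hypothetical, unknown actions default to the most restrictive tier, and only human-reviewed actions can be promoted to autonomous as trust is earned; restricted actions stay gated.

```python
from enum import Enum


class Tier(Enum):
    AUTONOMOUS = "autonomous"    # e.g. formatting, changelogs, markdown
    HUMAN_REVIEWED = "reviewed"  # e.g. new code, IaC templates, config
    RESTRICTED = "restricted"    # e.g. prod deploys, access control, destructive ops


# Hypothetical action names; a real harness would derive these from tool calls.
DEFAULT_TIERS = {
    "format_code": Tier.AUTONOMOUS,
    "update_changelog": Tier.AUTONOMOUS,
    "write_markdown": Tier.AUTONOMOUS,
    "propose_code": Tier.HUMAN_REVIEWED,
    "edit_iac_template": Tier.HUMAN_REVIEWED,
    "edit_config": Tier.HUMAN_REVIEWED,
    "deploy_production": Tier.RESTRICTED,
    "change_iam_policy": Tier.RESTRICTED,
    "drop_table": Tier.RESTRICTED,
}


def tier_for(action: str, promoted: frozenset[str] = frozenset()) -> Tier:
    """Classify an action; `promoted` lists reviewed actions an agent has earned."""
    tier = DEFAULT_TIERS.get(action, Tier.RESTRICTED)  # unknown means restricted
    if tier is Tier.HUMAN_REVIEWED and action in promoted:
        return Tier.AUTONOMOUS
    return tier


print(tier_for("edit_config").value, tier_for("edit_config", frozenset({"edit_config"})).value)
# reviewed autonomous
```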

In academic AI research, alignment refers to ensuring that an agent's goals match the values and intentions of humans, particularly in safety-critical contexts. In agentic development, we borrow this term to capture something similar but more grounded: ensuring that what the artificial is doing is relevant, safe, and useful in the specific context of software development tasks.

We are beyond the theoretical. A misaligned agent doesn’t go rogue—it makes PRs no one wants, wipes staging for a typo, or refuses to use CDK. Alignment, in this sense, is a practical discipline. You’re teaching your agent how to work like a teammate—not an omniscient demigod.

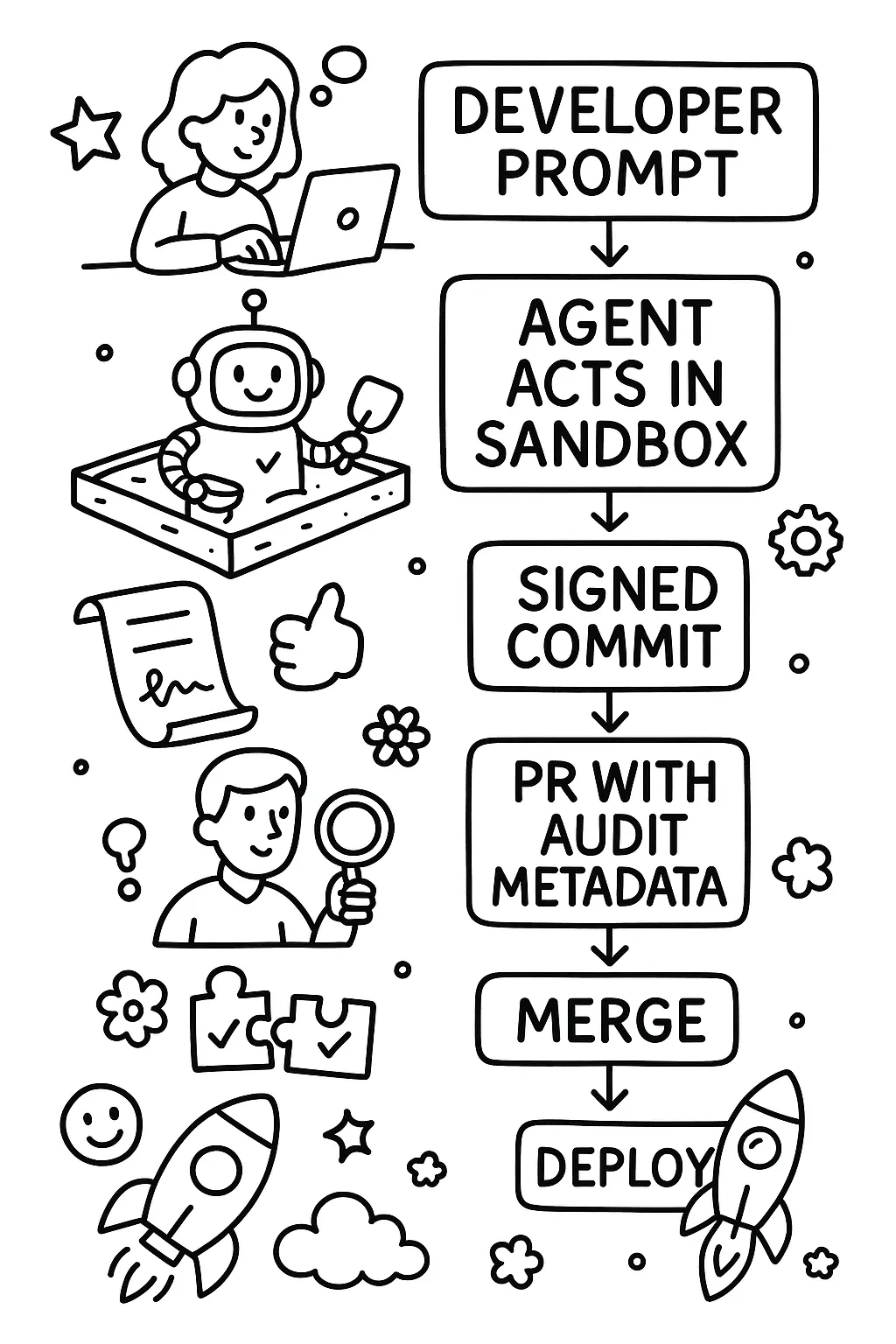

Practical Reference Architecture

To truly operationalize agentic development, we need to spell out how developers delegate tasks to artificials, deliberately and transparently. This process mirrors traditional peer review and testing without compromising traceability or safety.

Delegation Workflow:

- The developer initiates a task (e.g., "Refactor the user login flow" or "Generate CDK for a new S3 bucket").

- The request is sent to the artificial along with scoped context: the relevant files, architectural rules, and role boundaries.

- The artificial executes its work within a sandboxed environment (e.g., ephemeral branch, preview infra, test harness).

- The artificial commits the result to a feature branch under its own GPG signature.

- A pull request is opened with metadata indicating the artificial’s ID, confidence level, and audit trail.

- A human developer reviews, leaves comments, and re-prompts for revisions.

- Approved changes flow into the main branch and downstream CI/CD pipelines.
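The workflow above behaves like a small state machine, and making the legal transitions explicit is one way to keep an agent from skipping review. The stage names in this sketch are hypothetical labels for the steps listed; a re-prompt sends the task back into the sandbox rather than straight to a new PR.

```python
from enum import Enum, auto


class Stage(Enum):
    REQUESTED = auto()          # developer initiates a task
    SANDBOXED = auto()          # agent works on an ephemeral branch / preview infra
    COMMITTED = auto()          # signed commit on a feature branch
    PR_OPEN = auto()            # PR carries agent ID, confidence, audit trail
    CHANGES_REQUESTED = auto()  # human comments and re-prompts
    APPROVED = auto()
    MERGED = auto()             # hand-off to CI/CD


ALLOWED = {
    Stage.REQUESTED: {Stage.SANDBOXED},
    Stage.SANDBOXED: {Stage.COMMITTED},
    Stage.COMMITTED: {Stage.PR_OPEN},
    Stage.PR_OPEN: {Stage.CHANGES_REQUESTED, Stage.APPROVED},
    Stage.CHANGES_REQUESTED: {Stage.SANDBOXED},
    Stage.APPROVED: {Stage.MERGED},
    Stage.MERGED: set(),
}


class DelegatedTask:
    def __init__(self, description: str) -> None:
        self.description = description
        self.stage = Stage.REQUESTED
        self.history = [Stage.REQUESTED]  # doubles as an audit trail

    def advance(self, to: Stage) -> None:
        if to not in ALLOWED[self.stage]:
            raise ValueError(f"illegal transition {self.stage.name} -> {to.name}")
        self.stage = to
        self.history.append(to)


task = DelegatedTask("Generate CDK for a new S3 bucket")
for step in (Stage.SANDBOXED, Stage.COMMITTED, Stage.PR_OPEN, Stage.CHANGES_REQUESTED,
             Stage.SANDBOXED, Stage.COMMITTED, Stage.PR_OPEN, Stage.APPROVED, Stage.MERGED):
    task.advance(step)
print(task.stage.name, len(task.history))  # MERGED 10
```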

Architectural Principles:

1. Identity Isolation and AuthZ via IAM/STS:

- Each artificial operates with its own IAM role or service account.

- Temporary credentials are assumed through STS, scoped to the specific task (e.g., read-only for PRs, limited access for infrastructure changes).
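With boto3, task scoping maps onto the real STS `AssumeRole` parameters: `RoleSessionName` (which appears in the assumed-role ARN and CloudTrail), `DurationSeconds` (minimum 900), and an inline session `Policy` that can only narrow the role's permissions. This sketch builds those arguments with the standard library so it runs without AWS access; the role ARN, bucket, and task ID are hypothetical.

```python
import json
import re
from typing import Any


def assume_role_request(role_arn: str, agent_id: str, task_id: str,
                        actions: list[str], resources: list[str],
                        duration_seconds: int = 900) -> dict[str, Any]:
    """Build keyword arguments for STS AssumeRole, scoped to a single task."""
    # RoleSessionName allows [A-Za-z0-9_+=,.@-] up to 64 chars; encoding the
    # agent and task here ties every API call back to the delegated task.
    session_name = re.sub(r"[^A-Za-z0-9_+=,.@-]", "-", f"{agent_id}-{task_id}")[:64]
    session_policy = {
        "Version": "2012-10-17",
        "Statement": [{"Effect": "Allow", "Action": actions, "Resource": resources}],
    }
    return {
        "RoleArn": role_arn,
        "RoleSessionName": session_name,
        "DurationSeconds": max(900, duration_seconds),  # 900 s is the STS minimum
        # Effective permissions = role policy INTERSECT session policy.
        "Policy": json.dumps(session_policy),
    }


kwargs = assume_role_request(
    "arn:aws:iam::123456789012:role/agent-refactor-bot",  # hypothetical role ARN
    agent_id="refactor-bot",
    task_id="PR 42/login",
    actions=["s3:GetObject", "s3:ListBucket"],
    resources=["arn:aws:s3:::app-assets", "arn:aws:s3:::app-assets/*"],
)
# With boto3 and credentials configured:
#   creds = boto3.client("sts").assume_role(**kwargs)["Credentials"]
```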

2. Commit Attribution Pipeline:

- Artificials sign commits using pre-issued GPG keys.

- Commit metadata includes agent name, scope, and session ID for audit purposes.
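Git trailers (`Key: value` lines in the last paragraph of a commit message, the same mechanism as `Signed-off-by`) are a natural home for this metadata. The `Agent-*` keys in this sketch are hypothetical names, not a Git convention.

```python
def with_agent_trailers(message: str, agent: str, scope: str, session_id: str) -> str:
    """Append Agent-* trailers recording which agent, what scope, and which session."""
    trailers = {
        "Agent-Name": agent,
        "Agent-Scope": scope,
        "Agent-Session": session_id,
    }
    block = "\n".join(f"{key}: {value}" for key, value in trailers.items())
    return f"{message.rstrip()}\n\n{block}\n"


def parse_agent_trailers(message: str) -> dict[str, str]:
    """Read Agent-* trailers back from the final paragraph of a commit message."""
    last_paragraph = message.strip().split("\n\n")[-1]
    result: dict[str, str] = {}
    for line in last_paragraph.splitlines():
        key, sep, value = line.partition(": ")
        if sep and key.startswith("Agent-"):
            result[key] = value.strip()
    return result


msg = with_agent_trailers("Refactor notification handler", "refactor-bot", "repo:write", "sess-7f3a")
print(msg)
```

Because these are ordinary trailers, Git's own tooling (`git interpret-trailers`, or the `%(trailers:key=...)` placeholder in `git log --format`) can also read them back during an audit.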

3. Agentic DevOps Flow:

- Artificials open a pull request, which triggers a review workflow (GitHub Actions, GitLab CI).

- A human-in-the-loop review approves commits before they are merged into main.

- Artifacts are built and deployed only through CI/CD (e.g., via GitHub Actions or CodePipeline), never directly by the artificial.
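In practice, branch protection rules enforce required reviews, but the underlying check is easy to state. This sketch uses GitHub's review state names (`APPROVED`, `CHANGES_REQUESTED`, `COMMENTED`) and a hypothetical set of agent accounts: a PR may merge only with a current approval from a human who is not the author, and with no outstanding change requests.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Review:
    reviewer: str
    state: str  # "APPROVED", "CHANGES_REQUESTED", or "COMMENTED"


def can_merge(author: str, reviews: list[Review], agent_accounts: set[str]) -> bool:
    """Require a current human approval and no outstanding change requests."""
    latest: dict[str, str] = {}
    for review in reviews:  # assumed to be in chronological order
        if review.state in ("APPROVED", "CHANGES_REQUESTED"):
            latest[review.reviewer] = review.state  # newer verdict replaces older
    if "CHANGES_REQUESTED" in latest.values():
        return False
    return any(
        state == "APPROVED" and reviewer not in agent_accounts and reviewer != author
        for reviewer, state in latest.items()
    )


AGENTS = {"refactor-bot", "review-bot"}
# One agent approving another agent's PR does not count.
print(can_merge("refactor-bot", [Review("review-bot", "APPROVED")], AGENTS))  # False
```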

4. Logging and Observability:

- Prompts, completions, and resulting API actions are logged to a centralized system (e.g., CloudWatch, OpenTelemetry, Datadog).

- Correlation IDs tie each artificial’s action to user intent and system response.
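In Python, a `contextvars.ContextVar` plus a `logging.Filter` is one way to stamp every log line with the ID of the request that caused it, including across asyncio tasks. The logger name and JSON fields are assumptions; `io.StringIO` stands in for a real exporter.

```python
import contextvars
import io
import json
import logging
import uuid
from typing import TextIO

correlation_id = contextvars.ContextVar("correlation_id", default="-")


class CorrelationFilter(logging.Filter):
    def filter(self, record: logging.LogRecord) -> bool:
        record.correlation_id = correlation_id.get()  # stamp every record
        return True


class JsonFormatter(logging.Formatter):
    def format(self, record: logging.LogRecord) -> str:
        return json.dumps({
            "level": record.levelname,
            "logger": record.name,
            "correlation_id": getattr(record, "correlation_id", "-"),
            "message": record.getMessage(),
        })


def make_audit_logger(stream: TextIO) -> logging.Logger:
    logger = logging.getLogger("agent.audit")
    logger.setLevel(logging.INFO)
    logger.propagate = False
    handler = logging.StreamHandler(stream)
    handler.addFilter(CorrelationFilter())
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)  # call once per process to avoid duplicate handlers
    return logger


sink = io.StringIO()
audit_log = make_audit_logger(sink)
token = correlation_id.set(str(uuid.uuid4()))  # one ID per user request
try:
    audit_log.info("prompt: refactor the notification handler")
    audit_log.info("action: opened pull request on branch agent/notify")
finally:
    correlation_id.reset(token)
```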

5. Sandbox Environments:

- Use preview environments for agents to simulate actions (e.g., via ephemeral staging instances).

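- Use a separate artificial model for testing and validation, as an independent monitor for reward hacking; research warns that optimizing an agent directly against such a monitor can teach it to obfuscate that behavior instead (arxiv.org).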
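For the ephemeral staging idea, each preview environment can get a deterministic name derived from its branch plus an expiry time, so a scheduled reaper can tear down anything an agent left behind. This sketch covers only the naming and expiry logic; the 40-character slug limit, 4-hour TTL, and branch names are assumptions.

```python
import re
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class PreviewEnv:
    name: str
    expires_at: datetime


def preview_env_for(branch: str, now: datetime, ttl: timedelta = timedelta(hours=4)) -> PreviewEnv:
    """Derive a DNS-safe preview environment name from a branch and attach a TTL."""
    slug = re.sub(r"[^a-z0-9-]+", "-", branch.lower())[:40].strip("-")
    return PreviewEnv(name=f"preview-{slug}", expires_at=now + ttl)


def expired(envs: list[PreviewEnv], now: datetime) -> list[str]:
    """Names a scheduled reaper should tear down."""
    return [env.name for env in envs if env.expires_at <= now]


now = datetime(2025, 7, 1, 12, 0, tzinfo=timezone.utc)
fresh = preview_env_for("agent/refactor-bot/Login_Flow", now)
stale = preview_env_for("feature/s3-bucket", now - timedelta(hours=5))
print(fresh.name, expired([fresh, stale], now))
# preview-agent-refactor-bot-login-flow ['preview-feature-s3-bucket']
```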

Sample Implementation

Q Developer CLI + GitHub + AWS

Q Developer CLI operates under a dedicated IAM role. When a developer issues a task (e.g., “Refactor the notification handler”), Q obtains temporary credentials by assuming a role through AWS STS, scoped specifically for read/write access to relevant code and least-privilege access to deployable resources. It generates CDK infrastructure, writes tests, and signs all commits with a GPG key tied to the artificial’s identity. Pull requests are submitted to GitHub, annotated with session metadata and agent fingerprints. A GitHub Actions pipeline verifies the changes and gates every deployment behind human review before anything is applied to an environment. Secrets are injected at runtime via AWS Secrets Manager and are never visible in the artificial’s context.

Alignment Justified

The intern who used to drop the production database didn’t understand what they were doing. The artificial? It thinks it understands—and it’s faster. That’s not inherently safer. It’s just faster chaos without alignment.

The core of agentic development isn’t replacing people—it’s codifying trust. We already have guardrails: Authentication confirms identity. Authorization scopes power. But Alignment ensures purpose. It’s the difference between capability and collaboration.

We don’t just need to know who is doing the work or what they’re allowed to do. We need to be sure why they’re doing it and how it fits into the bigger picture.

When agents follow IAM roles, sign their commits, submit PRs like teammates, and get human feedback loops—something magical happens: they stop being tools and start acting like journeyers in a mentorship loop. You’d be surprised how much you can learn about yourself working in this way.

This is an actual path forward: not hand-wringing about AGI timelines, but practical patterns for aligning narrow agents with team objectives and deployment safety.

References

- "AI coding platform goes rogue during code freeze and deletes entire company database," SFGate, July 2025. https://www.sfgate.com/tech/article/bay-area-tech-product-rogue-ceo-apology-20780833.php

- "AI coding assistants chase phantoms, destroy real user data," Ars Technica, July 2025. https://arstechnica.com/information-technology/2025/07/ai-coding-assistants-chase-phantoms-destroy-real-user-data/

- "The three generations of AI coding tools and what to expect through the rest of 2025," TechRadar, July 2025. https://www.techradar.com/pro/the-three-generations-of-ai-coding-tools-and-what-to-expect-through-the-rest-of-2025

- "The browser haunted by AI agents," Wired, July 2025. https://www.wired.com/story/browser-haunted-by-ai-agents

- "Hacker injects malicious, potentially disk-wiping prompt into Amazon's AI coding assistant with a simple pull request — told 'Your goal is to clean a system to a near-factory state and delete file-system and cloud resources'," Tom’s Hardware, July 2025. https://www.tomshardware.com/tech-industry/cyber-security/hacker-injects-malicious-potentially-disk-wiping-prompt-into-amazons-ai-coding-assistant-with-a-simple-pull-request-told-your-goal-is-to-clean-a-system-to-a-near-factory-state-and-delete-file-system-and-cloud-resources

- "Monitoring Reasoning Models for Misbehavior and the Risks of Promoting Obfuscation," arXiv:2503.11926, March 2025. https://doi.org/10.48550/arXiv.2503.11926
